For reasons unknown to me, I have been tasked with creating a voice clone of someone. As a result, one of the things I've been working on is finding high-quality videos of him talking. This has led to me raging at my computer and hitting Command+T far too many times per video.

I figured there had to be a tool that isolates a single person's voice. Yet I couldn't find anything online that did this out of the box.

I do understand this is a fairly niche use case, but someone surely has thought of this before, right?

The Core

There is a ton of open-source audio software out there. After an afternoon of research, I settled on a cocktail of tools that gets the job done.

The entire process goes as such: Assess → Separate → Identify → Condense → Denoise → Normalize → Segment → Score.

All you do is provide your anchor (reference) audio. The program then goes through the input, finds where that person talks, extracts those parts, ranks them by similarity to the anchor and by quality, and keeps the best ones.

How It Works

-

Assess: Analyze the input audio files to get basic file information and audio characteristics. I know how the open-source TTS models were trained, so I know what I'm looking for in an audio file: a certain sample rate, no clipping, certain peak levels, etc.

You may be wondering what some of these criteria mean. For example, clipping means the audio was recorded too loud, so the peaks of the waveform got cut off. This causes distortion and a loss of audio quality, which makes it harder to isolate vocals accurately. By assessing the audio files first, we make sure we're working with high-quality input that will yield better results in the later steps.
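To make these checks concrete, here's a minimal sketch of an assessment pass over float audio in [-1, 1]. The accepted sample rates, the clipping threshold, and the -1 dBFS peak ceiling are all hypothetical placeholders; the post doesn't list the exact numbers it uses.

```python
import numpy as np

# Hypothetical acceptance criteria: the post doesn't give exact numbers.
ACCEPTED_SAMPLE_RATES = {22050, 24000, 44100, 48000}
CLIP_THRESHOLD = 0.999  # |sample| at or above this counts as clipped
MAX_PEAK_DBFS = -1.0    # want some headroom below full scale


def assess(audio: np.ndarray, sample_rate: int) -> dict:
    """Basic quality report for float audio shaped (samples,) or (channels, samples)."""
    x = np.abs(np.asarray(audio, dtype=np.float64))
    if x.size == 0:
        raise ValueError("empty audio")
    peak = float(x.max())
    # Fraction of samples sitting at (or very near) full scale.
    clipped_fraction = float(np.mean(x >= CLIP_THRESHOLD))
    peak_dbfs = float(20 * np.log10(peak)) if peak > 0 else float("-inf")
    return {
        "duration_s": x.shape[-1] / sample_rate,
        "sample_rate_ok": sample_rate in ACCEPTED_SAMPLE_RATES,
        "peak_dbfs": peak_dbfs,
        "clipped_fraction": clipped_fraction,
        "passes": (sample_rate in ACCEPTED_SAMPLE_RATES
                   and clipped_fraction == 0.0
                   and peak_dbfs <= MAX_PEAK_DBFS),
    }
```

To get the array from a file, `librosa.load(path, sr=None, mono=False)` keeps the native sample rate and returns float samples shaped (channels, samples) for multichannel input.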

-

Separate: Use source separation techniques to isolate vocals from background music and noise. This is where I saw a TON of services. What I couldn't find were the steps that come after this, where we isolate one specific person's voice.

So, there's this really cool tool called Demucs. Thank you very much, Meta, or I should say its creator (more on him below). I say this because when the person who created it left Meta, Meta gave up on the project (classic!).

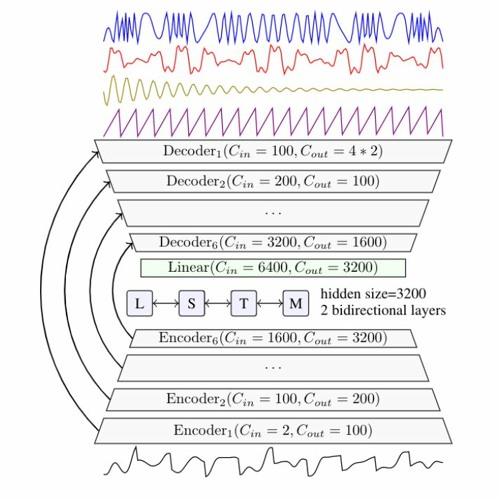

Demucs is a deep learning-based source separation model that can effectively separate vocals from music and other background sounds in an audio file. It uses a neural network architecture to analyze the audio signal and isolate the vocal components. This is a crucial step in the pipeline, as it allows us to focus on the vocals for further processing and analysis.
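A minimal sketch of running this step from Python by shelling out to the Demucs command-line tool. It assumes `pip install demucs`, the CLI's `--two-stems`, `-n` and `-o` options, and its default output layout of `<out>/<model>/<track name>/<stem>.wav`. The wrapper function names are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def demucs_command(input_path: str, out_dir: str, model: str = "htdemucs") -> list[str]:
    """Build a Demucs CLI call that splits a file into 'vocals' and 'no_vocals' stems."""
    return [
        sys.executable, "-m", "demucs",
        "--two-stems", "vocals",  # only separate vocals vs. everything else
        "-n", model,              # pretrained model name
        "-o", out_dir,            # output root directory
        input_path,
    ]


def expected_vocals_path(input_path: str, out_dir: str, model: str = "htdemucs") -> Path:
    """Where the vocals stem lands under Demucs' default output naming (assumed)."""
    return Path(out_dir) / model / Path(input_path).stem / "vocals.wav"


def separate_vocals(input_path: str, out_dir: str = "separated", model: str = "htdemucs") -> Path:
    """Run Demucs (requires `pip install demucs`) and return the vocals stem path."""
    subprocess.run(demucs_command(input_path, out_dir, model), check=True)
    return expected_vocals_path(input_path, out_dir, model)
```

For example, `separate_vocals("interview.mp3")` would be expected to write `separated/htdemucs/interview/vocals.wav`. Going through the CLI keeps the sketch independent of Demucs' internal Python modules.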

-

Identify: This is where it gets cool. From our anchor audio, we compute a vocal fingerprint: an embedding that captures the speaker's unique characteristics. We then compute the same kind of embedding for each segment of the isolated vocals from the previous step and measure how similar it is to the anchor embedding. If the similarity is above some threshold X, we keep that segment for the next step.
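The matching logic can be sketched with cosine similarity between embedding vectors. The 0.75 default threshold is a hypothetical stand-in for "threshold X". The optional `embed_with_resemblyzer` helper uses Resemblyzer's `VoiceEncoder.embed_utterance` and `preprocess_wav`; it's imported lazily so the filtering code runs without Resemblyzer installed.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two embedding vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def select_matching(anchor: np.ndarray, segments: list[np.ndarray],
                    threshold: float = 0.75) -> list[tuple[int, float]]:
    """Return (index, similarity) for segments close enough to the anchor, best first."""
    scored = [(i, cosine_similarity(anchor, emb)) for i, emb in enumerate(segments)]
    kept = [(i, s) for i, s in scored if s >= threshold]
    return sorted(kept, key=lambda item: item[1], reverse=True)


def embed_with_resemblyzer(wavs: list[np.ndarray], sample_rate: int) -> list[np.ndarray]:
    """Embed raw waveforms with Resemblyzer (requires `pip install resemblyzer`)."""
    from resemblyzer import VoiceEncoder, preprocess_wav

    encoder = VoiceEncoder()
    # preprocess_wav resamples and normalizes each waveform before embedding.
    return [encoder.embed_utterance(preprocess_wav(w, source_sr=sample_rate)) for w in wavs]
```

Resemblyzer's utterance embeddings are already L2-normalized, so a plain dot product would give the same score; the explicit normalization just keeps `cosine_similarity` correct for any embedding source.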

-

Condense: Combine multiple segments of identified vocals into a single audio stream.
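A minimal sketch of condensing, assuming the kept segments are mono float arrays at a shared sample rate. Putting a short silence between segments (0.25 s here) is an assumption on my part, so that words from different clips don't run into each other; the post doesn't say whether it does this.

```python
import numpy as np


def condense(segments: list[np.ndarray], sample_rate: int,
             gap_seconds: float = 0.25) -> np.ndarray:
    """Concatenate mono segments into one stream with a short silence between them."""
    if not segments:
        return np.zeros(0, dtype=np.float32)
    gap = np.zeros(int(round(gap_seconds * sample_rate)), dtype=np.float32)
    pieces = []
    for i, seg in enumerate(segments):
        if i > 0:
            pieces.append(gap)  # silence only *between* segments
        pieces.append(np.asarray(seg, dtype=np.float32))
    return np.concatenate(pieces)
```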

-

Denoise: Apply noise reduction algorithms to enhance the clarity of the isolated vocals. Thank you, open source!
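A minimal sketch of this step with DeepFilterNet, the denoiser credited further down. The calls (`init_df`, `load_audio`, `enhance`, `save_audio` from `df.enhance`) follow the Python usage in DeepFilterNet's README. The `denoise_files` wrapper and its list-of-pairs interface are hypothetical.

```python
def denoise_files(pairs: list[tuple[str, str]]) -> None:
    """Denoise each (input_path, output_path) pair with DeepFilterNet.

    Requires `pip install deepfilternet`; imported lazily so this file loads without it.
    """
    from df.enhance import enhance, init_df, load_audio, save_audio

    model, df_state, _ = init_df()  # load the default pretrained model once
    sr = df_state.sr()              # the model's working sample rate
    for input_path, output_path in pairs:
        audio, _ = load_audio(input_path, sr=sr)  # load and resample to the model's rate
        enhanced = enhance(model, df_state, audio)
        save_audio(output_path, enhanced, sr)
```

Loading the model once and looping over files avoids paying the model-initialization cost for every clip.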

-

Normalize: Adjust the volume levels to ensure consistent loudness throughout the audio. Why does Torch do so much audio?
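The post credits pyloudnorm for this step. Here's a minimal sketch using its `Meter.integrated_loudness` and `normalize.loudness` functions. The -23 LUFS target is an assumption (it's the EBU R128 broadcast target), not a value from the post. `loudness_gain` spells out the linear gain that corresponds to a loudness change in dB, which is the scaling `normalize.loudness` applies.

```python
import numpy as np


def loudness_gain(measured_lufs: float, target_lufs: float) -> float:
    """Linear gain for a loudness change of (target - measured) dB."""
    return 10.0 ** ((target_lufs - measured_lufs) / 20.0)


def normalize_loudness(audio: np.ndarray, sample_rate: int,
                       target_lufs: float = -23.0) -> np.ndarray:
    """Scale audio to a target integrated loudness (requires `pip install pyloudnorm`).

    Audio is float, shaped (samples,) or (samples, channels), and must be
    longer than pyloudnorm's 0.4 s measurement block.
    """
    import pyloudnorm as pyln

    meter = pyln.Meter(sample_rate)              # ITU-R BS.1770 loudness meter
    measured = meter.integrated_loudness(audio)  # in LUFS
    return pyln.normalize.loudness(audio, measured, target_lufs)
```

One caveat: boosting a quiet clip can push its peaks past full scale (pyloudnorm warns about possible clipping), so it's worth re-checking peaks afterwards.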

-

Segment: Split the audio into manageable chunks for easier processing and review. We segment on certain quality metrics.
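The post segments on quality metrics it doesn't spell out, so this sketch swaps in the simplest possible rule instead: consecutive fixed-length chunks, dropping a tail that's too short to be useful. The 10 s maximum and 3 s minimum are hypothetical values.

```python
import numpy as np


def segment(audio: np.ndarray, sample_rate: int,
            max_seconds: float = 10.0, min_seconds: float = 3.0) -> list[np.ndarray]:
    """Split mono audio into chunks of at most max_seconds; drop chunks shorter than min_seconds."""
    max_len = int(max_seconds * sample_rate)
    min_len = int(min_seconds * sample_rate)
    chunks = [audio[start:start + max_len] for start in range(0, len(audio), max_len)]
    return [c for c in chunks if len(c) >= min_len]
```

A quality-aware version would choose split points from per-frame metrics (for example, cutting at pauses a VAD reports) rather than at fixed offsets.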

-

Score: Evaluate the quality of the isolated vocals and rank them based on similarity. For each segment, we get the speech ratio (how much of the time he's talking), the peak level, the RMS level, the crest factor (peak-to-RMS ratio), the duration, etc.
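A sketch of per-segment scoring for mono float audio. Speech intervals are assumed to be non-overlapping (start, end) pairs in seconds, such as a VAD produces, and `similarity` is whatever score the identify step computed. The filtering thresholds in `rank` (at least 80% speech, peaks at or below -1 dBFS) are hypothetical.

```python
import numpy as np


def _to_db(value: float) -> float:
    """Convert a linear amplitude ratio to decibels (-inf for zero)."""
    return float(20 * np.log10(value)) if value > 0 else float("-inf")


def score_segment(audio: np.ndarray, sample_rate: int,
                  speech_intervals: list[tuple[float, float]], similarity: float) -> dict:
    """Duration, speech ratio, peak, RMS and crest factor for one mono segment."""
    x = np.asarray(audio, dtype=np.float64)
    if x.size == 0:
        raise ValueError("empty segment")
    duration = len(x) / sample_rate
    speech = sum(end - start for start, end in speech_intervals)
    peak = float(np.max(np.abs(x)))
    rms = float(np.sqrt(np.mean(x ** 2)))
    return {
        "similarity": similarity,  # e.g. cosine similarity to the anchor embedding
        "duration_s": duration,
        "speech_ratio": min(speech / duration, 1.0),
        "peak_dbfs": _to_db(peak),
        "rms_dbfs": _to_db(rms),
        "crest_db": _to_db(peak / rms) if rms > 0 else float("inf"),
    }


def rank(scores: list[dict], min_speech_ratio: float = 0.8,
         max_peak_dbfs: float = -1.0) -> list[dict]:
    """Drop mostly-silent or too-hot segments, then sort by similarity (best first)."""
    kept = [s for s in scores
            if s["speech_ratio"] >= min_speech_ratio and s["peak_dbfs"] <= max_peak_dbfs]
    return sorted(kept, key=lambda s: s["similarity"], reverse=True)
```

As a sanity check, a pure sine wave has a crest factor of about 3 dB (peak / RMS = √2), while natural speech usually sits well above that.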

All of this comes together, and we get some great clips to feed into an open-source TTS model to zero-shot clone his voice. I will explain that tech in another post.

The Open Source Stack

Without these, I'd be unable to talk about any of this. What took me a day to build would otherwise have taken years of development. Thanks, open source.

Demucs

Demucs is the backbone of the separation step. It's a hybrid transformer model that splits audio into stems (vocals, drums, bass, other). The quality is insane for something you can just pip install. Alexandre Défossez built it while he was on Meta's research team, and Meta archived the repo after he left. But Alexandre keeps it alive. Thank you for being a good person, Alexandre.

Silero VAD

VAD stands for Voice Activity Detection. It tells you exactly when someone is speaking in an audio file. It's lightweight and runs on CPU. I use this to know which segments are actually worth processing instead of wasting time on silence or background noise.
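A sketch of how Silero VAD can flag the parts worth processing, using its pip package (`pip install silero-vad`) and the `load_silero_vad`, `read_audio` and `get_speech_timestamps` functions shown in the project's README. The `cut_speech` helper and its 0.1 s padding are hypothetical; the padding just avoids clipping the edges of words.

```python
import numpy as np


def speech_timestamps(path: str) -> list[dict]:
    """Run Silero VAD on a file; returns [{'start': s, 'end': e}, ...] in seconds."""
    from silero_vad import get_speech_timestamps, load_silero_vad, read_audio

    model = load_silero_vad()
    wav = read_audio(path)  # mono, resampled to 16 kHz by default
    return get_speech_timestamps(wav, model, return_seconds=True)


def cut_speech(audio: np.ndarray, sample_rate: int, timestamps: list[dict],
               pad_seconds: float = 0.1) -> list[np.ndarray]:
    """Slice out each speech region (plus a little padding) so later steps skip silence."""
    pad = int(pad_seconds * sample_rate)
    pieces = []
    for ts in timestamps:
        # Timestamps are in seconds, so they map onto audio at any sample rate.
        start = max(0, int(ts["start"] * sample_rate) - pad)
        end = min(len(audio), int(ts["end"] * sample_rate) + pad)
        if end > start:
            pieces.append(audio[start:end])
    return pieces
```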

Resemblyzer

This is the magic behind the identification step. Resemblyzer generates speaker embeddings: basically a numerical fingerprint of someone's voice. You give it your anchor audio, it spits out an embedding, and then you can compare that against any other audio to see how similar the voices are. It's built on top of a speaker encoder from the "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis" paper. Long name, but all you need to know is that it lets me find my target voice in any audio.

DeepFilterNet

DeepFilterNet uses deep learning (shocker) to filter out background noise while preserving speech quality. It's designed for real-time speech enhancement, so it's fast, and it doesn't destroy the vocal characteristics I'm trying to preserve. Obviously, I'm not running it in real time, but it still works great for this.

pyloudnorm

This handles the normalization step. It implements the ITU-R BS.1770-4 loudness standard (I totally knew what this was before), which is just a fancy way of saying it makes all your audio the same perceived loudness. This is really important when you're stitching together clips from different sources. Without it, you'd have some segments screaming at you and others whispering, which, as you might guess, would suck for zero-shot voice cloning.

Librosa

The Swiss Army knife of audio analysis in Python. I use it for loading files, computing spectrograms, and pretty much any audio feature extraction I need. It's been around forever and the documentation is solid. If you're doing anything with audio in Python, you're probably using librosa whether you know it or not.

torchaudio

PyTorch's audio library. A lot of the models I'm using (Demucs, Silero) are PyTorch-based, so torchaudio handles the audio I/O and transformations that play nice with the tensor operations. Also has some solid built-in audio processing functions.

Why This Is Cool (Probably Only for Me)

This removes the tedious manual editing. It was so painful that I would rather spend 100 hours building this than 50 hours editing by hand. If anyone knows of software out there that already does this, that would be cool, but for now I think I may be the only person on this planet with this problem.